Knowledge Systems in the Intelligence Age

Where humans and AI fit into knowledge work

What will AI look like in the future? There’s this notion that in the last couple of centuries, humans have gone from the industrial age to the information age. I think that we’ve arrived at the end of the information age and are entering the intelligence age.

– Paraphrased from Karl Friston’s interview on Machine Learning Street Talk

The abundance of information via the internet was the hallmark of the information age and the abundance of intelligence via generative AI capable of processing information will be the hallmark of the intelligence age. Humans are no longer the only ones with the intelligence needed to reason with ambiguity. We are beginning to lean on generative AI to handle the ambiguity that comes with knowledge work and that trend will only accelerate.

Last time, we examined why generative AI is primed for processing information. In this post, we’ll explore a framework to help you introspect your own knowledge workflows and identify where AI and humans are needed.

A Knowledge System

Knowledge work is frequently distinguished from other types of work as "non-routine." This implies that knowledge work revolves around resolving the ambiguity of the non-routine. To address ambiguity effectively, it’s important to adopt systems thinking.

As advocated by Donella Meadows in her seminal work, Thinking in Systems: A Primer, by using systems thinking, we create robust and adaptable structures that evolve with the ever-changing current of information inputs.

By the end of this post we’ll have a clear understanding of the four steps and the transitions outlined above that constitute a knowledge system.

TL;DR

AI can augment each of the transitions between and within states

Knowledge systems are complex and transformer-based LLMs (like GPT) currently don’t have enough context length to reliably comprehend and improve the overall system

Unlike AI, humans will have a holistic view of these knowledge systems and will continue to have a role in architecting, operating, and optimizing systems

1/ Index

What's happening

The first step involves capturing external information from the world and organizing it into a useful state.

Capture is the extraction of information from the external environment. For a company, this may entail updating its database based on incoming transactions, while for an individual, it could involve browsing through new emails and retaining new information.

Organization is the application of a schema to process and refine the captured information into knowledge. Without this step, we would be left with raw information in need of shaping before it can be used for planning.
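To make the capture and organize distinction concrete, here is a minimal Python sketch for the individual-reading-email case. The `Contact` schema, the inbox format, and the function names are all assumptions made up for illustration; a real system would pull from a mail API and use a richer schema.

```python
from dataclasses import dataclass
from email.utils import parseaddr


@dataclass
class Contact:
    """Hypothetical schema: the shape we want captured emails to take."""
    name: str
    email: str
    topic: str


def capture(inbox):
    """Capture: pull new raw items from the outside world (here, a list of dicts)."""
    return [msg for msg in inbox if msg.get("unread")]


def organize(raw_messages):
    """Organize: apply the schema so raw messages become structured knowledge."""
    contacts = []
    for msg in raw_messages:
        name, address = parseaddr(msg["from"])
        contacts.append(Contact(name=name, email=address, topic=msg["subject"]))
    return contacts


# Made-up inbox data for illustration only.
inbox = [
    {"from": "Ada Lovelace <ada@example.com>", "subject": "Invoice 17", "unread": True},
    {"from": "Bob <bob@example.com>", "subject": "Lunch?", "unread": False},
]
knowledge = organize(capture(inbox))
```

Only the unread message survives capture, and `organize` turns it into a `Contact` record that later steps can query by field instead of re-reading the raw email.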

Where AI fits in

Capture: Prior to generative AI, information was already being captured, but in an indiscriminate way. We’ve had microphones, cameras, and web crawlers for years, but raw information can now be parsed on demand with AI-enhanced capture such as OpenAI’s Whisper for audio and Meta’s Segment Anything Model for visuals.

Organize: AI that understands natural language means a new crop of indexing systems will pop up that will make use of all the text that humans don’t want to read themselves. A perfect example of this is how modern embeddings and vector databases have enabled the development of ‘chatbots over internal documents’ products such as libraria.dev, kili.so, and berri.ai.

Where humans shine

People will continue to be critical in deciding how to capture and organize. With these new capabilities, we will need more humans to help guide AI to ask the right questions, collect the right information, and implement the right schemas to convert information into knowledge.

2/ Plan

What's happening

In this next step, a trigger is set off by a combination of recently captured information and some policy within the knowledge base. This initiates a retrieval process where relevant facts and knowledge are rounded up and synthesized into a workable plan, the output of this step. Think of the plan as a blueprint or a specification for an action.

Where AI fits in

Trigger: AI is already used to trigger plenty of workflows, such as monitoring billions of payments for fraud. Having AI trigger workflows will become more common, as you no longer need the economies of scale of large companies to kick off workflows with generative AI.

An example of this in practice is introducing AI to a Zapier workflow. Instead of defining one hundred email rules, you can lean on AI to trigger the appropriate next step based on its interpretation of an incoming email.
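The idea of swapping a pile of email rules for a single interpretation step might look roughly like the Python sketch below. Everything here is hypothetical: the action names, the labels, and `keyword_classifier`, which is only a stand-in for a language model call so that the example runs on its own. It does not reflect Zapier's actual API.

```python
from typing import Callable

# Hypothetical next steps a workflow could take.
ACTIONS = {
    "invoice": "forward_to_accounting",
    "support": "open_support_ticket",
    "other": "leave_in_inbox",
}


def keyword_classifier(email_text: str) -> str:
    """Stand-in for a language model; a real system would ask an LLM for the label."""
    text = email_text.lower()
    if "invoice" in text or "payment" in text:
        return "invoice"
    if "help" in text or "broken" in text:
        return "support"
    return "other"


def trigger(email_text: str, classify: Callable[[str], str] = keyword_classifier) -> str:
    """Map an incoming email to a next step; unknown labels fall back to 'other'."""
    label = classify(email_text)
    return ACTIONS.get(label, ACTIONS["other"])
```

The design choice worth noting is that `classify` is injected. The workflow only depends on getting a label back, so the keyword stand-in can be replaced by a model call without touching the rest of the trigger, and an unexpected label degrades to a safe default rather than failing.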

Retrieve: Embeddings generated by AI can not only help you organize information but can also help you fetch information. Depending on the information processed by the trigger, a set of embeddings can be recalled from the knowledge base and assembled into a plan. In an instant, AI is able to interpret incoming information, process it as a trigger, and recall relevant knowledge.
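As a rough illustration of embedding-based retrieval, the Python sketch below ranks stored knowledge by cosine similarity to a query vector. The three-dimensional vectors and the knowledge base entries are made up for illustration; real embeddings come from a model and have hundreds or thousands of dimensions, and a vector database would replace the linear scan.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy knowledge base: text mapped to a made-up embedding vector.
KNOWLEDGE_BASE = {
    "Refund policy: refunds within 30 days": [0.9, 0.1, 0.0],
    "Office hours: 9am to 5pm": [0.0, 0.2, 0.9],
    "Shipping takes 3 to 5 days": [0.2, 0.9, 0.1],
}


def retrieve(query_vector, top_k=1):
    """Return the top_k texts whose embeddings are most similar to the query."""
    ranked = sorted(
        KNOWLEDGE_BASE.items(),
        key=lambda item: cosine_similarity(query_vector, item[1]),
        reverse=True,
    )
    return [text for text, _ in ranked[:top_k]]


# A hypothetical embedding of the question "Can I get my money back?"
query = [0.8, 0.2, 0.1]
top_match = retrieve(query)
```

With these toy vectors the refund policy entry ranks first, since the query points in nearly the same direction as its embedding.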

Where humans shine

Central to this step are the policies that inform the trigger and the retrieval. While advanced AI may set policies in the future, I suspect our current class of transformer-based LLMs will fall short because of their limited context length and inability to see the big picture. Given this, humans are still uniquely positioned to be orchestrators and policy makers when it comes to the Plan step.

3/ Act

What's happening

This is perhaps the most straightforward step. In fact, much of the work that humans have traditionally performed in this step has already been delegated to machines. Just as “farmers” refers to people who farm, “computers” used to refer to people who compute. In both lines of work, machines have replaced humans at incredible scale. In 1900, farms employed 41% of the US workforce, whereas in 2021, the percentage had dropped to slightly over 1%. The reason why “computers” no longer refers to humans is probably that the number of human “computers” in the workforce has dropped to zero. The market for artisanal organic calculations is grim.

Despite the widespread use of machines for computing, there’s plenty of knowledge work that requires human intervention, particularly when it comes to handling the nuances of plans and actions.

Executing plans often requires judgment when interpreting plans, determining the actions, identifying the appropriate tools, and generating inputs for those tools. Examples in the workplace include a programmer taking requirements and building a feature around a particular API call, or a marketer translating marketing plans into ad campaigns and content.

Where AI fits in

Execute: OpenAI, with ChatGPT plugins, is staking a claim to this Act step by providing ChatGPT with a marketplace of APIs to interact with. As Packy McCormick pointed out in APIs All the Way Down, many software companies rely on a host of APIs to deliver their product or service. With AI, APIs can be called using fuzzy logic instead of explicit programming for every scenario, which facilitates plan execution.

Where humans shine

Plugins, agents, and models will still need to be built. Once built, they’ll need optimizing. I believe the unique AI scaling challenge in this step lies with the routing problem. As more APIs and tools are made available to AI, it will have trouble deciding which ones to pick.

Smoothing out the operations of this execution step will require human effort, as AI recursively calling AI for help can be incredibly inefficient and lead to local maxima. An AI might iterate a thousand times to use a finicky tool properly, but a human might have the insight after the tenth try to realize that an alternative approach might be better.
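One simple way to keep an agent from grinding on a finicky tool is to give it an attempt budget and escalate to a person once the budget is spent. The Python sketch below is only an illustration under that assumption; `run_with_budget`, `make_flaky_tool`, and the result dictionary shape are hypothetical names, not part of any agent framework.

```python
def run_with_budget(tool, task, max_attempts=10):
    """Try a tool up to max_attempts times, then hand off instead of looping on."""
    last_error = "no attempts made"
    for attempt in range(1, max_attempts + 1):
        try:
            return {"status": "done", "result": tool(task), "attempts": attempt}
        except Exception as error:  # a real system would catch narrower errors
            last_error = str(error)
    # Budget spent: escalate so a human can consider an alternative approach.
    return {"status": "needs_human", "reason": last_error, "attempts": max_attempts}


def make_flaky_tool(failures_before_success):
    """Build a made-up tool that fails a fixed number of times before working."""
    calls = {"count": 0}

    def tool(task):
        calls["count"] += 1
        if calls["count"] <= failures_before_success:
            raise RuntimeError("tool rejected the input")
        return f"completed: {task}"

    return tool
```

A tool that fails twice and then works returns a `done` result on the third attempt, while a tool that keeps failing produces a `needs_human` result after ten tries rather than a thousand.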

As with the previous steps, the theme here is that humans are able to have a holistic view of the system and break out of ruts more easily than AI.

4/ Update

What’s happening

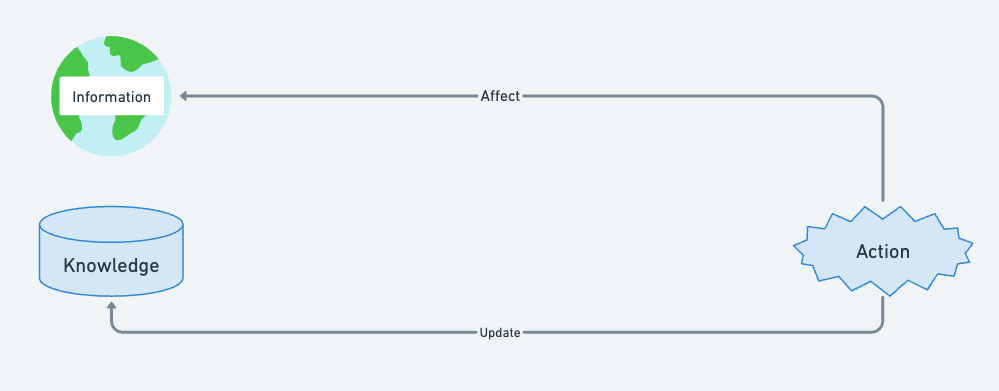

Finally, an action has consequences that affect the external world and feedback that updates the internal system.

For instance, an action could send a purchase order to a supplier and simultaneously update a row in the database indicating an order has been made. Given the expected inventory, this knowledge base may then trigger new plans and actions such as scheduling manufacturing on the factory floor.
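The purchase order example can be sketched in a few lines of Python. The inventory layout, the `REORDER_POINT` policy, and the plan names are assumptions invented for this illustration, and the "supplier" is just a list standing in for the outside world.

```python
REORDER_POINT = 50  # hypothetical policy: expected stock needed before manufacturing


def next_plans(inventory, item):
    """Use the updated knowledge base to decide which plans to trigger next."""
    row = inventory[item]
    expected = row["on_hand"] + row["on_order"]
    if expected >= REORDER_POINT:
        return ["schedule_manufacturing"]
    return ["reorder"]


def place_purchase_order(inventory, item, quantity, outbox):
    """One action with two effects: it affects the world and updates the system."""
    # Affect: the order leaves the system and reaches the supplier.
    outbox.append({"to": "supplier", "item": item, "quantity": quantity})
    # Update: the internal record now reflects that an order has been made.
    inventory[item]["on_order"] += quantity
    return next_plans(inventory, item)


# Made-up starting state for illustration.
inventory = {"widgets": {"on_hand": 20, "on_order": 0}}
supplier_outbox = []
plans = place_purchase_order(inventory, "widgets", 40, supplier_outbox)
```

After the order, expected inventory is 60, which clears the hypothetical reorder point, so the update itself triggers the next plan of scheduling manufacturing.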

The feedback generated in this step is what makes systems resilient and adaptive. Collecting feedback and incorporating that into the system as knowledge is what knowledge systems do.

Where AI fits in

Update: Just like how AI can enrich capture, AI can also enrich internal updates by synthesizing the immediate feedback from an action and triggering new workflows. Recommendation algorithms like those that power TikTok use the update-driven feedback loop by registering how long a person has lingered on each video.

Previously, only big companies with economies of scale could use AI in update-driven feedback loops; however, with the growing accessibility of generative AI, virtually any business or individual can do the same.

Affect: With the widespread availability of generative AI, we can expect an acceleration of AI-driven actions that shape our world. The 2010s showed us how recommendation algorithms can affect public discourse, which in turn informs the kind of content that gets created. The new content is then fed back into the algorithms, influencing creators to produce more content that continues to shape pop culture. This affect-driven feedback loop becomes increasingly efficient and powerful as AI advances, shortening the time it takes to complete each cycle.

Where humans shine

The acceleration of feedback loops is a double-edged sword. Recommendation algorithms of the last decade are a perfect example of how unchecked feedback loops can quickly polarize society. Balancing feedback loops make a system resilient when set up correctly, but reinforcing feedback loops can cause decay when their cycles reinforce unintended behavior.
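The difference between the two loop types shows up clearly in a toy simulation. This Python sketch assumes the simplest possible update rules, with made-up gains and a made-up target: a reinforcing loop adds a fraction of the current value, while a balancing loop closes a fraction of the gap to a target.

```python
def simulate(update, start, steps):
    """Apply an update rule repeatedly and record every value along the way."""
    value = start
    history = [value]
    for _ in range(steps):
        value = update(value)
        history.append(value)
    return history


def reinforcing(value, gain=0.5):
    """Reinforcing loop: the bigger the value, the bigger the next increase."""
    return value + gain * value


def balancing(value, target=10.0, gain=0.5):
    """Balancing loop: each step closes part of the gap to the target."""
    return value + gain * (target - value)


runaway = simulate(reinforcing, 1.0, 3)  # [1.0, 1.5, 2.25, 3.375]
settling = simulate(balancing, 1.0, 3)   # [1.0, 5.5, 7.75, 8.875]
```

The reinforcing series grows without bound, while the balancing series settles toward its target. Shortening the cycle time speeds up both, which is why the reinforcing kind needs monitoring.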

It’s critical for humans to understand the implications of feedback loops beyond first-order effects as they are key leverage points within systems that require monitoring and intervention.

Everything Everywhere All at Once

In practice, this framework is most relevant for builders and managers of knowledge systems. Noah Smith argues in his essay, The third magic, that AI can be viewed as a way to control outcomes without understanding.

While the essay speaks to how AI enables this “third magic”, I would add that controlling outcomes without understanding has always been possible with humans. As an end-user of both human-powered and AI-powered knowledge systems, you place trust in the system itself to achieve your outcome. To end-users of knowledge systems, the system is magic.

The implication of including AI in knowledge systems is that AI will drastically reduce the cost of knowledge work. Products and services that were exclusive to the elite who can afford copious amounts of human labor will be broadly available.

The 20th century saw an increase in the use of household appliances, which offers a comparison for the time-saving benefits that a world powered by AI-based knowledge work could bring. Mondays used to be laundry day in the US, when the backbreaking labor could take all day. A load of laundry now takes 30 to 45 minutes to wash by machine.

How to build a useful knowledge system

The last thought I’ll leave you with is that robust knowledge systems are composed of knowledge subsystems. When each step and state transition is powered by one or more other knowledge systems, you’ll have a more flexible system. This is why humans have historically been the best choice as knowledge system components. We are sophisticated knowledge systems in our own right because of our ability to index long-term memory, plan, act, and reflect on system updates.

In recent weeks, the internet has gone crazy for AutoGPT and BabyAGI, which are early AI-agent-powered knowledge systems. Despite the hype, these systems are not as resilient or adaptive as humans and often don’t accomplish the open-ended knowledge tasks that are assigned to them. This means we aren’t going to see knowledge systems exclusively powered by AI right away. Instead, we can expect to see some of these AutoGPT systems powering specific steps in a hybrid knowledge system with human and AI components.

As a builder, manager, or individual contributor trying to make sense of how to harness AI in your work, the trick is to start with narrow scopes in domains that you understand well. Map out the knowledge systems that you spend the most time on and experiment with delegating parts of your knowledge work to AI.

Looking back at the laundry analogy, laundry technically isn’t completely automated yet. People still have to gather laundry across their homes, load the machine, and start the wash. But by and large, machines have reduced the laundry problem from a whole-day affair to a quick chore by automating the most labor-intensive step. You can now do the same with your knowledge-intensive work.
